Creeper Crawler

As I wrote earlier, I was confident I could collect the data for the Particle Database by myself, but I soon realized it was just too much. So, after some struggle, I decided to write a web crawler for harvesting semantic data.

Choosing the target platform was quick: PHP is not suitable for this, so .NET it was. At first I tried to customize some of the existing open source crawlers, but it was a pain, so ultimately I wrote my own. I don’t need any GUI, so a simple console app was enough.

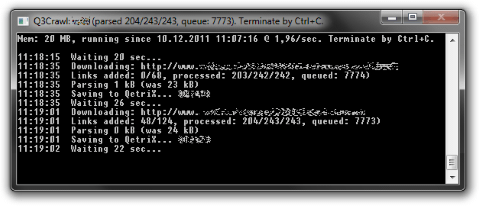

It downloads plain text data (e.g. an HTML page) using the HttpWebRequest class and extracts all links to other pages. For every run I can define a set of boundaries, and links outside these boundaries are thrown away. The rest are put into a queue. Robots.txt and meta tags are part of those boundaries as well.
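To give a rough idea of the link extraction and boundary check, here is a minimal sketch in Python. It is not the crawler's actual .NET code: the boundary rule (same host plus a path prefix) and all function names are assumptions made up for illustration, and robots.txt and meta tag handling are left out (Python's standard library has `urllib.robotparser` for the former).

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def extract_links(html, base_url):
    """Return absolute URLs found in the page, with #fragments removed."""
    parser = LinkCollector()
    parser.feed(html)
    links = []
    for href in parser.hrefs:
        absolute, _fragment = urldefrag(urljoin(base_url, href))
        links.append(absolute)
    return links


def within_boundaries(url, allowed_host, allowed_prefix):
    """Hypothetical boundary rule: same host, path under a given prefix."""
    parts = urlparse(url)
    return (
        parts.scheme in ("http", "https")
        and parts.netloc == allowed_host
        and parts.path.startswith(allowed_prefix)
    )


def enqueue_links(html, base_url, queue, seen, allowed_host, allowed_prefix):
    """Queue each in-bounds link once; everything else is thrown away."""
    for url in extract_links(html, base_url):
        if url not in seen and within_boundaries(url, allowed_host, allowed_prefix):
            seen.add(url)
            queue.append(url)
```

The `seen` set is what keeps the same page from being queued twice when several links point to it.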

Then the crawler prepares the data for parsing: it strips off all unimportant parts and replaces or deletes others (like whitespace). Next it checks for the presence of a defined string, which indicates there is some data to extract. Finally, using regular expressions, the structured data is extracted and sent to the server for further processing.
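The clean, check, extract sequence could look something like the Python sketch below. The marker string, the HTML layout and the regular expression are all invented for the example; the real patterns depend entirely on the site being crawled, and sending the result to the server is not shown.

```python
import re

# Hypothetical marker: only pages containing it are worth parsing.
MARKER = 'class="particle"'

# Hypothetical record pattern: a name cell followed by a mass cell.
RECORD = re.compile(
    r'<td class="name">(?P<name>[^<]+)</td>\s*'
    r'<td class="mass">(?P<mass>[^<]+)</td>'
)


def prepare(html):
    """Strip parts that never hold data and normalize whitespace."""
    html = re.sub(r"(?is)<(script|style)\b.*?</\1>", "", html)  # scripts, styles
    html = re.sub(r"(?s)<!--.*?-->", "", html)                  # comments
    return re.sub(r"\s+", " ", html).strip()                    # whitespace runs


def extract_records(html):
    """Return a list of dicts, or an empty list if the marker is missing."""
    text = prepare(html)
    if MARKER not in text:
        return []
    return [match.groupdict() for match in RECORD.finditer(text)]
```

Checking for the marker first is cheap and saves running the heavier expressions on pages that have nothing to offer.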

On the server side there is a PHP script, which post-processes the data and saves it into the database, finds relevant links to other entities and stores the URL of the data source to avoid duplicates.
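One simple way to get that kind of duplicate protection is to make the source URL unique in the database and let the insert silently skip rows that already exist. The sketch below shows the idea in Python with SQLite instead of PHP; the table layout, the column names and the one-record-per-URL rule are assumptions for illustration, not the real schema.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open the database and create the (hypothetical) records table."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS records ("
        " source_url TEXT PRIMARY KEY,"  # uniqueness gives the duplicate check
        " name TEXT NOT NULL,"
        " mass TEXT)"
    )
    return conn


def save_record(conn, source_url, name, mass):
    """Insert a record; return False if this source URL was already stored."""
    cursor = conn.execute(
        "INSERT OR IGNORE INTO records (source_url, name, mass) VALUES (?, ?, ?)",
        (source_url, name, mass),
    )
    conn.commit()
    return cursor.rowcount == 1
```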

Then the crawler politely waits a while before loading the next URL from the queue, because I don’t want to overload these servers. And... that’s it! Simple, yet powerful.
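Put together, the main loop is little more than a queue and a pause. Here is a hedged Python sketch of it; `fetch` and `handle` are hypothetical callbacks standing in for the download and parsing steps, and the two-second default delay is an arbitrary placeholder, not the value the crawler really uses.

```python
import time
from collections import deque


def crawl(start_urls, fetch, handle, delay_seconds=2.0, sleep=time.sleep):
    """Visit queued URLs one by one, pausing between requests.

    fetch(url) returns the page body; handle(url, body) processes it and
    returns the in-bounds links found on the page. Both are supplied by
    the caller.
    """
    queue = deque(start_urls)
    seen = set(start_urls)
    visited = []
    while queue:
        url = queue.popleft()
        body = fetch(url)
        for link in handle(url, body):
            if link not in seen:  # never queue the same URL twice
                seen.add(link)
                queue.append(link)
        visited.append(url)
        if queue:  # no need to wait after the last page
            sleep(delay_seconds)
    return visited
```

Passing `sleep` in as a parameter makes it easy to try the loop without actually waiting.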